Sad news coming out of Japan: workers at the nuclear power plants are stressed, sleep deprived and showerless (Hongo, 2011). In addition to having lost their own homes to the tsunami, the workers at the nuclear plants have gone up to 10 days without rest or showers while trying to keep the stricken reactors cool. The nuclear industry is already a high-risk industry, and these workers are being expected to perform in a superhuman manner, dealing with occupational catastrophe as well as personal tragedy. While most of the articles being written are about the nuclear risk, the risk to the workers is also far higher than usual. This is truly a tragic situation.

Archive for the ‘human error’ Category

Culture eats strategy for breakfast

In human error, Patient Safety, Safety climate on March 22, 2011 at 6:02 pm

The 2011 patient safety culture report is out from the AHRQ, detailing the results of the survey of patient safety culture in over 1000 hospitals with almost half a million respondents. There is some good news and some very concerning news. On the bright side, teamwork and supervisor attention to patient safety received high marks, with 75-80% positive responses. Very concerning, however, are the two lowest scores: handoffs and hospital response to error.

The positive responses to the perception of safe handoffs came in at only 45% and the perception of a non-punitive response to error weighed in at 44%. Of particular concern, as expressed many times on this blog, is the perception of 56% of respondents that response to error is punitive! This perception has not improved AT ALL since the 2007 survey when data was collected from 382 hospitals.

For the New England region alone, the response-to-error survey item elicited a positive response from a paltry, scary 38% of respondents. 62% of those working in hospitals in New England perceive that there is a punitive approach to error!

What does this mean?

A culture in which staff perceive that they will be punished for making errors creates secrecy and a reluctance to report incidents. This can lead to great patient harm. When you assign blame according to the bad apple theory (the error involved a bad clinician) and punish the “bad apples,” the system goes unfixed, making the next patient every bit as vulnerable as the one who was harmed by the “bad apple.” Unsafe conditions and near misses go under-reported, creating a significant information deficit for senior leaders who are trying to improve safety and quality in their organizations. As a senior leader, even if your policy is non-punitive, if the staff believe it is punitive, their behavior will be risky.

To move away from a culture of blame, I suggest going to the Just Culture community website linked on this blog or reading “Behind Human Error” (2010) by Woods, Dekker, et al.

Checking Prescriptions

In human error, Patient Safety on March 19, 2011 at 11:31 am

Here is another resource patients can use to be sure their prescriptions were filled accurately. Encouraging patients to check this type of site enhances safety. I would also advocate for all pharmacies to include pictures of the pills along with the drug information they provide.

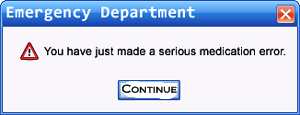

Enhancing resilience: Medication Error Recovery

In human error, Human Factors, Resiliency on November 13, 2010 at 5:48 pm

Beyond Reason…to Resiliency

In High Reliability Orgs, human error, Human Factors, Normal Accident Theory on November 13, 2010 at 5:06 pm

An earlier post presented James Reason’s Swiss cheese model of failure in complex organizations. This model and the concept of latent failures are linear models of failure, in that the failure is the result of one breakdown, then another, then another, which combine to contribute to a failure by someone or something at the sharp end of a process.

More recent theories expand on this linear model and describe complex systems as interactive, in that processes and relationships interact in a non-linear fashion to produce failure. Examples of these are Normal Accident Theory (NAT) and the theory of High Reliability Organizations (HRO). NAT holds that once a system becomes complex enough, accidents are inevitable. There will come a point when humans lose control of a situation and failure results, as in the case of Three Mile Island. In High Reliability Theory, organizations attempt to prevent the inevitable accident by monitoring the environment (St Pierre, et al., 2008). HROs look at their near misses to find holes in their systems; they look for complex causes of error, reduce variability and increase redundancy in the hopes of preventing failures (Woods, et al., 2010). While these efforts are worthwhile, they still have not reduced failures in organizations to an acceptable level. Sometimes double checks fail, and standardization and policies increase complexity.

One of the new ways of thinking about safety is known as Resilience Engineering. …

A short list of Don’ts

In human error, Human Factors, Patient Safety on November 7, 2010 at 8:09 pm

When the outcome of a process is known, humans can develop a bias when looking at the process itself. When anesthesiologists were shown the same sequence of events, being told there was a bad outcome influenced their evaluation of the behavior they saw (Woods, Dekker, Cook, Johannesen & Sarter, 2010). This has been shown to be true in other professions also. This tendency to see an outcome as more likely than it seemed in real time is known as hindsight bias. This is why many failures are attributed to “human error.” In actuality, the fact that many of these failures do not occur on a regular basis shows that, despite complexity, the humans are somehow usually controlling for failure. It is important to study the failure as well as the usual process that prevents failure.

When following up on a failure in a healthcare system, Woods, et al. (2010) recommend avoiding these simplistic but common reactions:

“Blame and train”

“A little more technology will fix it”

“Follow the rules”

Man versus System

In human error, Normal Accident Theory, Patient Safety, Safety climate on November 5, 2010 at 10:20 am